A new international study has found that leading artificial intelligence assistants frequently misrepresent news content. The research, published Wednesday by the European Broadcasting Union (EBU) in collaboration with the BBC, highlights growing concerns about the reliability of AI-powered tools as news sources.

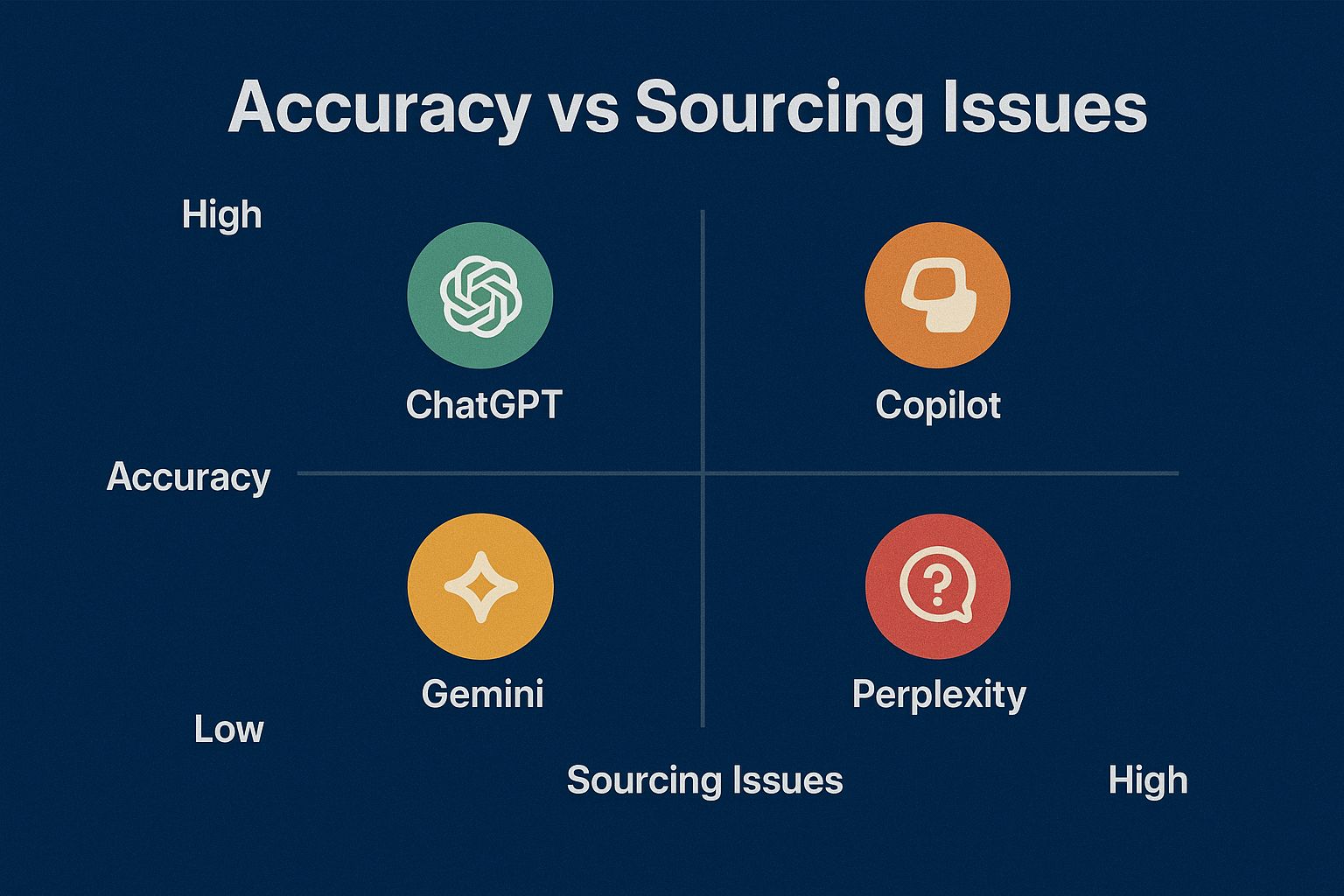

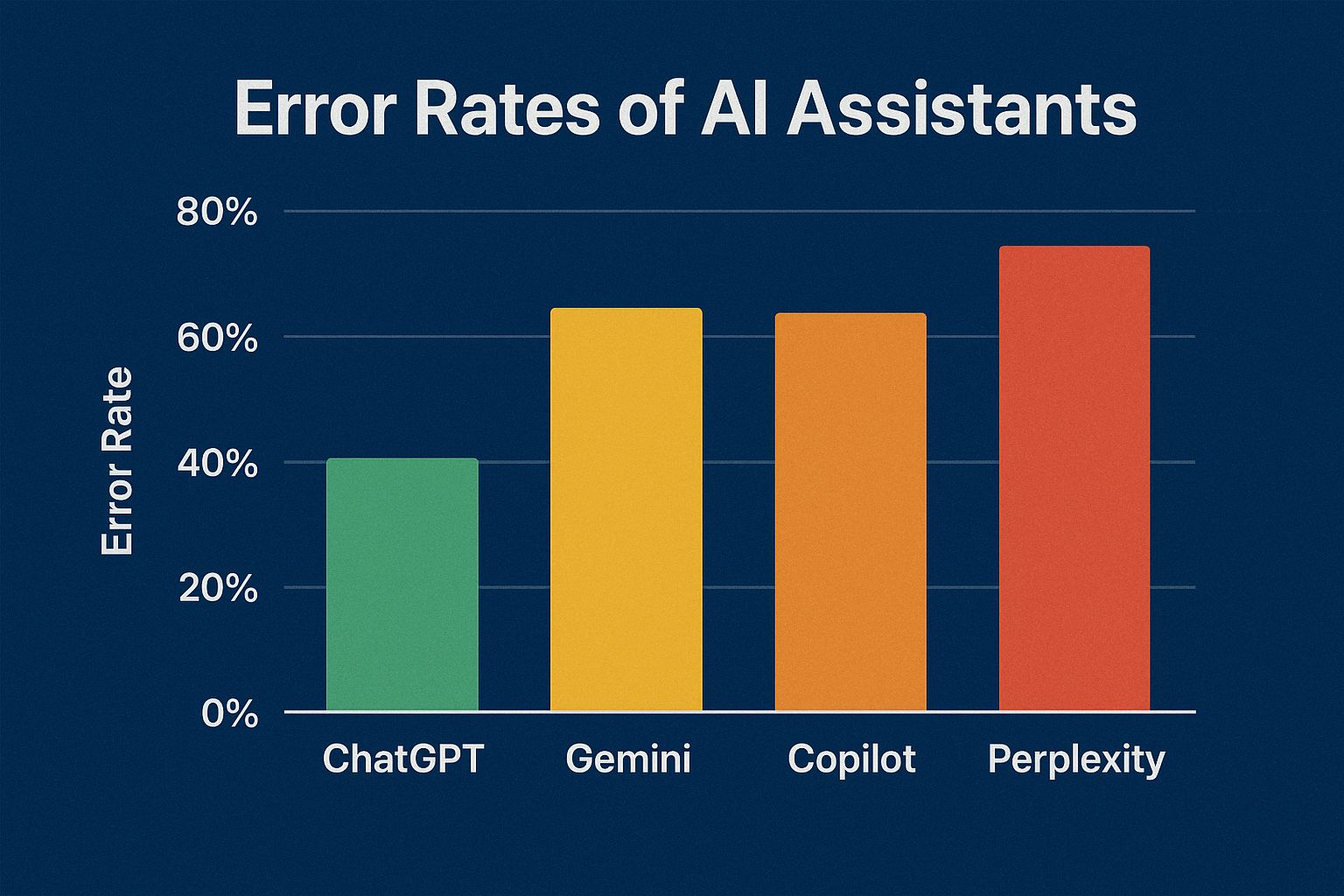

The study evaluated more than 3,000 responses from popular AI assistants, including ChatGPT, Copilot, Gemini, and Perplexity, across 14 languages. It assessed the responses for accuracy, sourcing quality, and the ability to distinguish factual reporting from opinion.

⚠️ Widespread Inaccuracies

According to the findings, 45% of AI-generated responses contained at least one significant issue, and 81% had some form of problem. These ranged from factual inaccuracies to outdated information and misleading attributions.

Sourcing proved to be a major weakness. Roughly one-third of responses showed serious sourcing errors, such as missing, misleading, or incorrect attribution. The report found that Google’s Gemini had the highest rate of sourcing problems, affecting about 72% of its responses, compared with below 25% for each of the other assistants tested.

📉 Examples of Errors

Among the examples cited were Gemini’s incorrect claims about legislative changes on disposable vapes and ChatGPT describing Pope Francis as the current pope months after his death. The study also identified outdated or contextually misleading information across several platforms.

🗣️ Industry Reactions

None of the companies has yet issued an official statement on the study, but their previous remarks point to ongoing efforts to improve accuracy.

Google has stated it welcomes user feedback to refine Gemini’s accuracy and usefulness.

OpenAI and Microsoft have previously acknowledged the problem of “hallucinations,” in which an AI model generates incorrect or misleading information, and have said they are working to reduce them.

Perplexity states on its official website that its “Deep Research” feature achieves 93.9% factual accuracy.

🌍 Global Research Effort

The investigation involved 22 public-service media organizations from 18 countries, including France, Germany, Spain, Ukraine, Britain, and the United States. Researchers warned that the growing reliance on AI assistants for news could threaten public trust in journalism.

“When people don’t know what to believe, they often end up believing nothing at all — and that can weaken democratic participation,” said Jean Philip De Tender, EBU’s Media Director.

📊 Growing Use Among Young Audiences

According to the Reuters Institute’s Digital News Report 2025, 7% of online news consumers, and 15% of those under age 25, already use AI assistants to get their news.

The EBU-BBC report calls for greater accountability among AI developers and stricter standards for accuracy and transparency in news-related responses.
